Well, my home storage system was running out of space. Looking at my pretty LibreNMS graphs, I would probably be at 100% utilization within 6 months. My existing setup was a Core i7 870 tower with an Nvidia GT 630 graphics card, stuffed with 5 x 2TB drives. It was a pseudo-hyperconverged system for me, since it housed both my virtual machines and my storage: a throwback to my gaming desktop days that had morphed into my home server and virtualization/storage platform.

The goal was to find something that was low power, allowed me to tinker around, and wouldn't cause me hardware headaches with incompatibilities. Since I'm a Linux guy, I like to stick as close to Intel as possible for things like NICs. This usually rules out AMD motherboards. I began my research and fell in love with the Avoton/Rangeley line of Atoms. These aren't the Atoms of old. Available in 4 and 8 core versions, these things are little beasts, especially at 14W to 20W TDP.

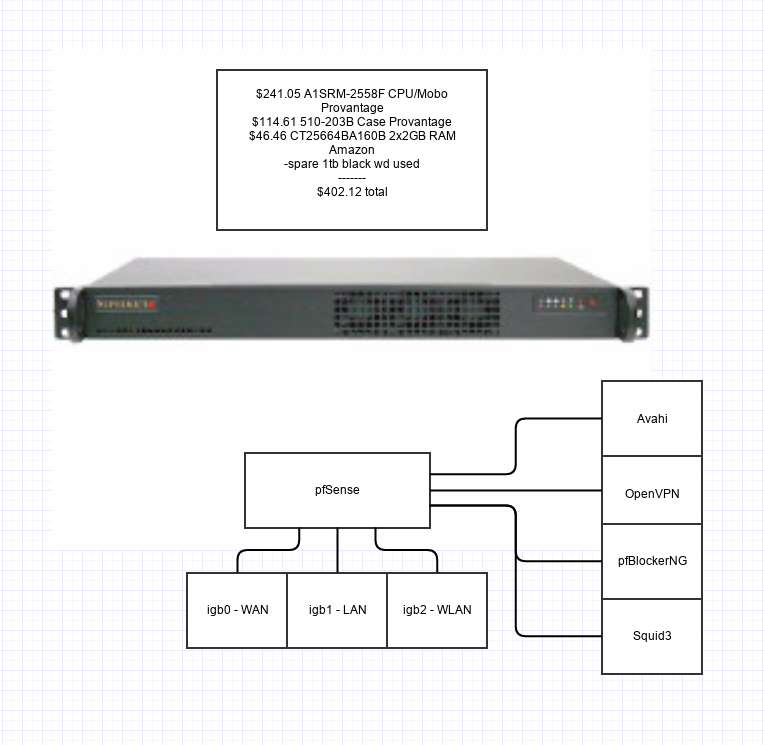

My first task was to get an Avoton/Rangeley into the home to tinker with, see how they handle virtualization, and so on. Since I had been wanting to move my pfSense install out of the virtual world and give it a proper build, I opted to grab a C2558 Supermicro board. The Rangeley side of these Atoms supports AES-NI as well as Intel QuickAssist. Gonzopancho (from pfSense) posted IPsec benchmarks of his Rangeley systems and was able to achieve just about line rate on a gigabit connection. And that's without using Intel QuickAssist yet. Before finalizing my pfSense install, I loaded up a couple of Linux distros to play around with. This thing was a beast! In all honesty, it was probably only slightly slower "in feel" than my Core i7. All said and done, it came to $400ish for a pfSense box that could, according to Gonzo's testing, pull a gig of IPsec encrypted traffic at roughly 14 watts TDP. Not bad at all.

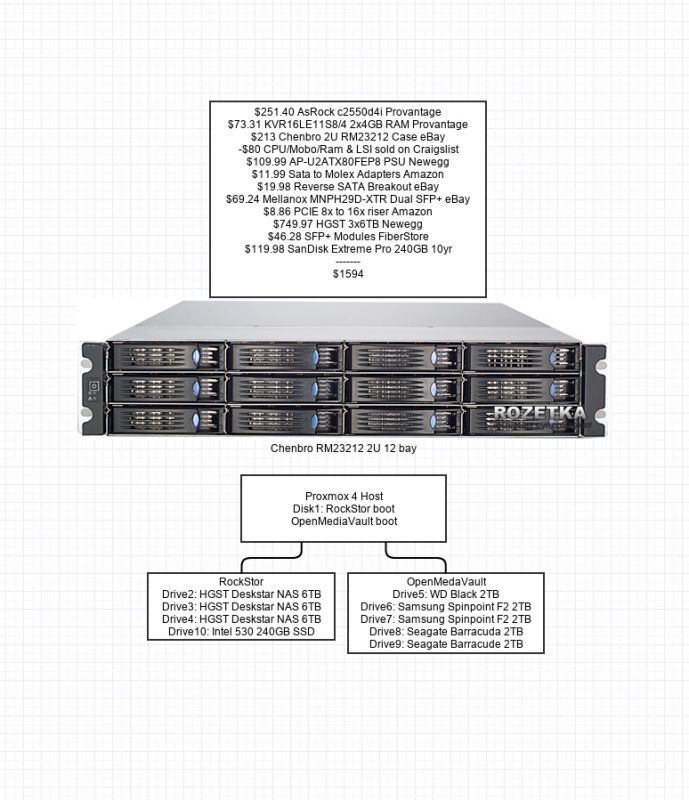

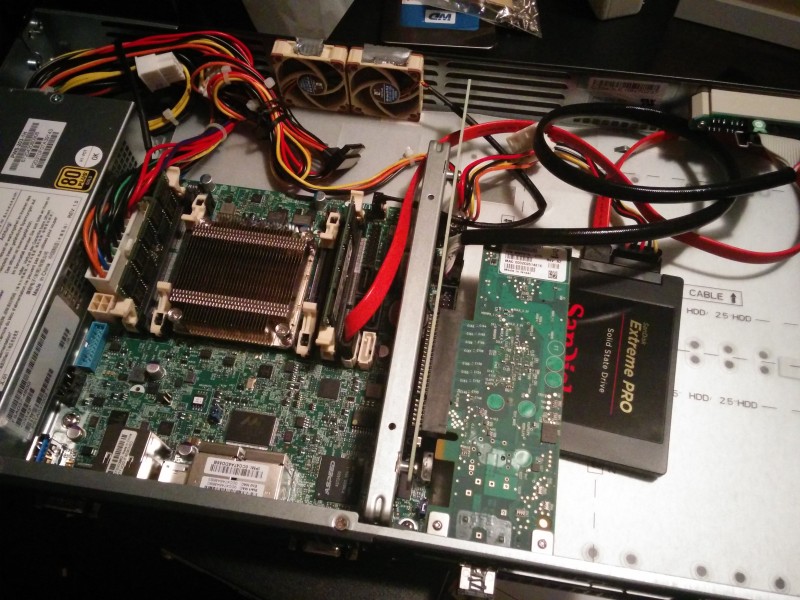

Now for my storage that was filling up. I previously had everything virtualized, in a pseudo-hyperconverged manner. All virtualization, storage and networking were basically on my Core i7 desktop tower. Going forward, I wanted to split this into more of a proper virtualization environment: one with a storage backend and separate hypervisors for virtual machines. I opted to grab the C2550 version for my storage build since it has a turbo mode in lieu of the Intel QuickAssist on the C2558. I found a smokin' deal on a Chenbro 12-bay chassis on eBay and threw the ASRock C2550 board in it with 8GB of RAM and a Mellanox dual-port SFP+ card. I was a little worried about sound levels, since the previous Xeon board that the server came with was a screamer! After throwing the ASRock board in it, it actually had some sane fan speed levels in the BIOS. The sound wasn't actually that bad either. It reminds me of a desktop system from the 90s. It's quiet enough that I've got it sitting in a closet in a room next to my toddler, and she has no issues sleeping at all.

I ended up ordering 3 x HGST 6TB drives when a Newegg deal came through for $750. Eventually, I wanted to move all my 'day to day' storage needs over to these drives. Although I loved the simplicity of OMV, I was really itching to move away from mdadm + ext4 if possible. BTRFS seemed to fit the bill for the features I was looking for: file-aware redundancy, checksumming, compression, snapshots, etc. But the OMV maintainer seemed to be rather opposed to adding support for it to the GUI at the moment, citing stability issues. Maybe rightfully so, since OMV uses a Debian base.

I then found RockStor, a CentOS-based BTRFS NAS distro. Kernel 3.19 was right around the corner, which added support for raid5/6 scrubbing and checksumming. I began playing around with RockStor on bare metal and as a Proxmox guest. My testing occurred over the course of about 2 months. I had a couple of hard locks early on, mostly when virtualizing RockStor. But as new kernels for both Proxmox and RockStor came out, those issues went away. I tested the hell out of BTRFS/RockStor. I pulled drives, and copied over and scrubbed literally terabytes and terabytes worth of data. After I felt I couldn't test anymore and I was getting consistent results, with no data loss at all during any of my tests, I decided to move forward with RockStor.

What I ended up going with was Proxmox as the host, with RockStor and OpenMediaVault as storage backends. I retained my original OMV array and moved it over. I figured I'd keep this around and back up family photos to it just in case BTRFS failed me in some way. Now, it's not generally best practice to virtualize your storage, but it was something I had been doing for a long time. I felt comfortable with it and understood the issues that can arise from such a setup. Plus, it's a homelab, so I wanted to tinker.

Because I was going to be adding a proper hypervisor to the mix, I also wanted to play around with migrations on Proxmox. This meant making the storage system a node in the Proxmox cluster and mounting shared volumes on the storage node. The catch is that those volumes are served by the very virtual machines the storage node itself hosts, so at boot the fstab mounts would be attempted before the storage guests were up. Luckily, I was able to easily solve this issue by putting a 'sleep 60; mount -a' line in the rc.local file. This allowed the Proxmox storage node to boot, start up RockStor and OMV, wait 60 seconds for their boot to finish, and then mount the virtual machine disk volumes from the fstab. It worked great.
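A fixed 60-second sleep works, but it is a guess at how long the storage guests take to boot. As an alternative (not what I actually ran), here is a minimal Python sketch that polls a TCP port on the storage guest and only then runs `mount -a`. The function names are made up for illustration, and the default port 2049 assumes the volumes are shared over NFS, which may not match your setup.

```python
import socket
import subprocess
import time


def wait_for_port(host: str, port: int, timeout: float = 120.0, interval: float = 2.0) -> bool:
    """Return True once a TCP connect to host:port succeeds, False after `timeout` seconds."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            # A successful connect means the service on the guest is accepting connections.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # Guest not up yet (refused, unreachable, or timed out); wait and retry.
            time.sleep(interval)
    return False


def mount_when_ready(host: str, port: int = 2049) -> int:
    """Run `mount -a` once the storage guest answers; return mount's exit code (1 if it never came up).

    Port 2049 (NFS) is an assumption; use whatever port your storage guest serves on.
    """
    if not wait_for_port(host, port):
        return 1
    return subprocess.run(["mount", "-a"]).returncode
```

Calling `mount_when_ready()` with the storage guest's address from a small script launched by rc.local would replace the fixed sleep, and it needs to run as root just like the original line.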

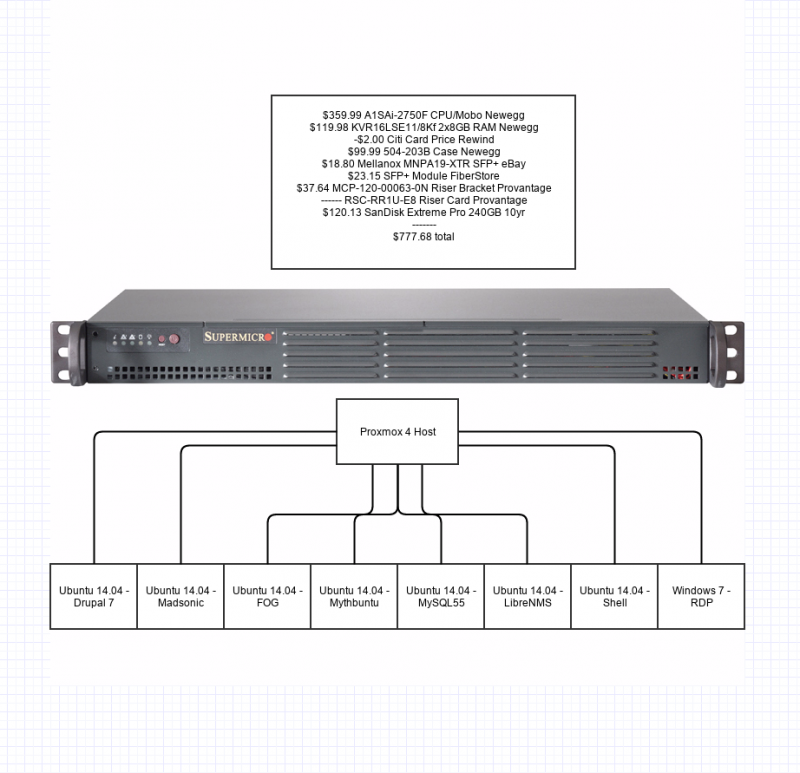

For my hypervisor, I opted to go with a Supermicro C2750 board in a small 1U shallow-depth Supermicro case. This was practically identical in size to the pfSense box I built. For now, I stuffed 16GB of RAM in it with a SanDisk Extreme Pro 240GB SSD (10yr warranty) for boot. This thing is crazy fast and sits idle most of the time with the virtualization load I have. This box also received a Mellanox 10Gb SFP+ card. The SFP+ side of connectivity is directly connected, but will be switched once 4x10 SFP+ units come down in price. It also sports 2 small Noctua fans that blow over the CPU silently. I practically can't hear this system at all.

Here's a look at the final virtual machine configuration I ended up with. About 12GB of the 16GB of RAM is currently provisioned to virtual machines. Once KSM kicks in after about a day or so, I'll usually sit at around 9GB of total RAM utilization. That gives me a little headroom for a couple more virtual machines if need be, although I'll probably double the RAM in the coming months anyway.
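If you want to see how much KSM is actually merging on the host, the kernel exposes counters under `/sys/kernel/mm/ksm`. The following is a rough Python sketch, assuming a Linux host with KSM enabled. The function names are my own, and multiplying `pages_sharing` by the page size is only an approximation of the memory saved.

```python
import os
from pathlib import Path

# Standard sysfs location for the KSM counters on Linux.
KSM_DIR = Path("/sys/kernel/mm/ksm")


def ksm_saved_bytes(pages_sharing: int, page_size: int) -> int:
    """Approximate bytes saved by KSM: each 'sharing' page is a duplicate that was merged away."""
    return pages_sharing * page_size


def read_ksm_saved_gib(ksm_dir: Path = KSM_DIR) -> float:
    """Read pages_sharing from sysfs and convert the estimated savings to GiB."""
    pages_sharing = int((ksm_dir / "pages_sharing").read_text())
    page_size = os.sysconf("SC_PAGE_SIZE")
    return ksm_saved_bytes(pages_sharing, page_size) / 2**30
```

For scale, 786432 merged 4 KiB pages works out to 3 GiB, which is the same order as the gap between what I have provisioned and what the host reports in use (though not all of that gap is necessarily KSM).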

So what type of power numbers do these rigs put out in their final form? Well, I hooked up a Kill-A-Watt to find out. The following readings are what I found. First, I measured each system powered off, after IPMI had finished booting. Then I took the rough average of watts during a cold boot, making note of the peak wattage I saw. Finally, I took a reading after the boot process completed and the system was as idle as it would ever be.

Supermicro A1SRM-2558F (pfSense)